Mon, June 03, 2024

Open-source LXD / Incus image server

It's no secret that we use LXD as the main hypervisor for managing containers. It's simpler and has a more batteries-included mindset than Kubernetes.

With LXD our clusters are lean, performant and efficient. Clusters can be bootstrapped and ready to run apps in just a few minutes. Our experience with LXD has been wonderful, and we are really happy with it.

But in July 2023, Canonical took over the LXD project from the Linux Containers community. This set off a chain of events that led us to decide we needed our own image server.

Why we considered building our own image server in the first place

There are several reasons why we considered building our own image server after Canonical took over the project.

One reason was that we wanted to be in control of what images were being served in Opsmaru.

We also wanted to be able to control when images got deprecated / removed from circulation.

However, these reasons alone weren't enough for us to accelerate the development of our own image server. Building one would cost time and other resources, and we could spend those on the other things we were prioritizing.

Our first dead end

After Canonical took over the LXD project, a fork of LXD called Incus was created. Incus filled the void left behind by LXD in the Linux Containers project portfolio.

Then, in December 2023, the leader of the Linux Containers project announced that all LXD users would lose access to the community image server by May 2024.

Our initial reaction to this was, "Well, Incus is a fork of LXD so we can just replace LXD with Incus in our setup". We figured this was going to be the path forward for us.

However, after trying this out we hit a few challenges.

Incus is its own project and its contributors have their own plans for it. That makes sense and it's normal. But it does mean that a project can diverge from what you need as a user.

One of the key features we require (fan networking) was removed from Incus. Fan networking provides cross-node networking for containers (i.e. containers on different nodes in the cluster can see each other). This was actually one of the features that attracted us to LXD, since we got it for free without any extra provisioning. It made the cluster simple to set up.

As it turns out, our Terraform module that provisions LXD clusters requires fan networking. There's no getting around that.

Someone recommended OVN to us as an alternative, but that required extra provisioning and setup. It would have added complexity, and we didn't want that.

So Incus was out.

Our second dead end

On Jan 10th 2024, Canonical announced that they were working on their own image server to replace the community server. "Great!", we thought.

Many members on the forum asked them for timelines, and while Canonical did respond, the information was sparse and underwhelming.

We waited a while.

At this point, we had known about the cliff coming in May for a few weeks.

We knew this would be an existential problem for us and probably for others too. It's hard to express how it feels to be waiting for someone to reply when your entire business hinges on their answer.

We waited some more.

No new information was coming out. The lack of information meant we couldn't plan.

Meanwhile, the cliff kept getting bigger. What if Canonical didn't come through? Or what if they came through, but it didn't work for us?

If that happened, we'd have to build something ourselves at the last minute. And we'd have to dive into building it head first, without knowing exactly what kind of project we'd be getting into.

After a couple more weeks of radio silence, we decided we no longer wanted to wait and see.

After all, Canonical's priority is not our priority. For us the image server represented a foundational piece in our infrastructure. Booting up new clusters required the image server. Our business would be dead before the summer without a replacement for the community image server.

There's no handbook for building an image server

Given the time-sensitive nature of the problem, we needed to get this image server done and out of the way as soon as we could. We needed an MVP, something we could get up and running in a few weeks. We began scouring GitHub and the LXD forums for clues on how to go about building the image server.

We figured out that the image server consists of a few key features:

- Some kind of CI to build the images.

- Some mechanism to host the built images.

- Some server endpoint to publish JSON in simplestreams format.
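
To make the third piece more concrete, here is a rough sketch of the kind of JSON such an endpoint might publish. It is not how Polar does it. The field names (`format`, `datatype`, `products`, `versions`, `items`, `ftype`) and the `streams/v1/images.json` path reflect our understanding of the simplestreams layout that LXD / Incus clients read, and should be treated as assumptions to check against a real server. The function names, image paths and serial are hypothetical.

```python
import hashlib
import json


def build_product(os_name, release, arch, variant, serial, files):
    """Return (product_key, product_entry) for one image build.

    `files` maps a file type (e.g. "root.squashfs") to a (path, bytes) pair.
    Field names are assumed from the simplestreams layout, not from Polar.
    """
    items = {}
    for ftype, (path, data) in files.items():
        items[ftype] = {
            "ftype": ftype,
            "path": path,
            "size": len(data),
            "sha256": hashlib.sha256(data).hexdigest(),
        }
    key = f"{os_name}:{release}:{arch}:{variant}"
    entry = {
        "aliases": f"{os_name}/{release}/{variant}",
        "os": os_name,
        "release": release,
        "arch": arch,
        "variant": variant,
        # One version per build, keyed by a serial such as a timestamp.
        "versions": {serial: {"items": items}},
    }
    return key, entry


def build_streams(products):
    """Build the index document and the product catalog as plain dicts."""
    catalog = {
        "content_id": "images",
        "datatype": "image-downloads",
        "format": "products:1.0",
        "products": dict(products),
    }
    index = {
        "format": "index:1.0",
        "index": {
            "images": {
                "datatype": "image-downloads",
                "format": "products:1.0",
                "path": "streams/v1/images.json",  # assumed catalog location
                "products": sorted(catalog["products"]),
            }
        },
    }
    return index, catalog


if __name__ == "__main__":
    # Hypothetical build output; real files would come from the CI step.
    product = build_product(
        "alpine", "3.19", "amd64", "default", "20240221_0000",
        {"root.squashfs": ("images/alpine/rootfs.squashfs", b"placeholder")},
    )
    index, catalog = build_streams([product])
    print(json.dumps(index, indent=2))
    print(json.dumps(catalog, indent=2))
```

The point of the sketch is the split of responsibilities: CI produces the files and their checksums, storage holds the files, and the endpoint only has to serve two small JSON documents that point at them.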

There wasn't much documentation on how an image server could be built. And it wasn't clear how long the whole process was going to take.

We catch a break

Thankfully, the Linux Containers community had open-sourced their image-building tool, distrobuilder, along with their CI configuration.

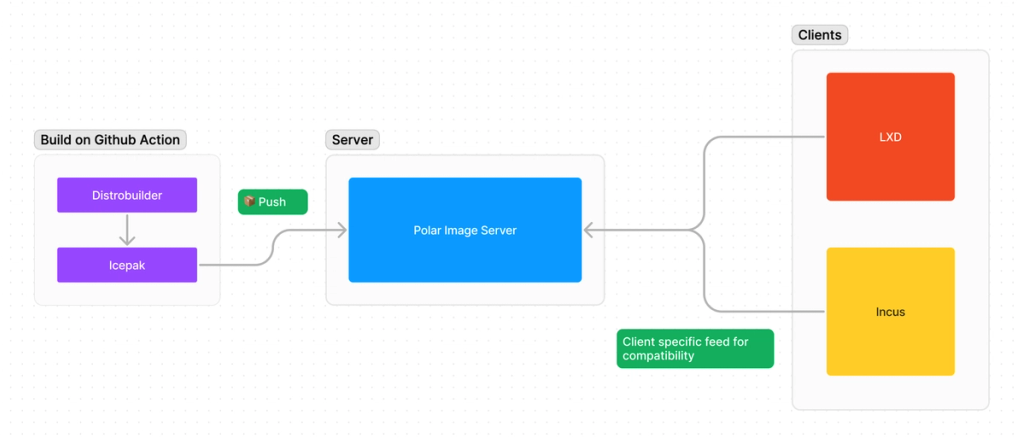

After a bit of tinkering and thinking, we came up with a simple architecture that we were confident could be up and running in just a few weeks:

We started building on 12th Feb 2024 and had our sandbox MVP server up and running on 21st Feb 2024.

Our plan worked.

It's pretty amazing to look back at it and realize we built it in 9 days. It's crazy how fast you can build something when you really need to.

In this timespan we built a GitHub Action called Icepak and our image server called Polar. We also recently got our production server up and running.

We're happy to announce that we've already shifted to using the image server as the default for our Opsmaru service.

One last thing: Performant image distribution

To have an effective image server we also needed to put a CDN in front of it. After all, we don't want to be serving large files from our origin bucket or our image server.

To do this, we configured our image server to use a CDN. The LXD / Incus client requests the file from our image server, and the server simply responds with a 302 redirect to the CDN.
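
As a rough illustration of that redirect step (not Polar's actual code), here is a minimal sketch using only Python's standard library. The CDN host `cdn.example.com`, the request paths and the names `cdn_location`, `RedirectHandler` and `make_server` are all hypothetical. It also assumes the CDN mirrors the same path layout as the image server, which may not match a real setup.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical CDN host, used only for illustration.
CDN_BASE = "https://cdn.example.com"


def cdn_location(request_path: str, cdn_base: str = CDN_BASE) -> str:
    """Map a path requested from the image server to its CDN URL.

    Assumes the CDN exposes files under the same path as the image server.
    """
    return cdn_base.rstrip("/") + "/" + request_path.lstrip("/")


class RedirectHandler(BaseHTTPRequestHandler):
    """Answer every GET with a 302 pointing at the CDN copy of the file."""

    def do_GET(self):
        self.send_response(302)
        self.send_header("Location", cdn_location(self.path))
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the example quiet


def make_server(port: int = 0) -> HTTPServer:
    """Create the server on localhost; port 0 lets the OS pick a free port."""
    return HTTPServer(("127.0.0.1", port), RedirectHandler)


if __name__ == "__main__":
    server = make_server(8080)
    print("Redirecting image requests to", CDN_BASE)
    server.serve_forever()
```

With this shape the image server never streams the large image files itself. It only answers small requests and hands the client a `Location` header, so the bandwidth-heavy download is served by the CDN.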

If you would like to see more about how this works, you can check out the code.

And that was that

We finished it. We still have some plans for expanding Polar, but it's up and working. And we're relieved and happy to share it.

Show me the Code

If you're interested in seeing more and getting the source code for our image server, you can access it below. ⭐ Be sure to star our repository to show your support.

Image Server Access

If you'd like to get access to the image server you can do so by heading over to https://images.opsmaru.com. You'll be able to sign up and get your own personal token you can use with your own LXD / Incus clusters.

Final Thoughts

There is a lot more we can do with the image server. We do plan on developing it and making it better. We also want to make it extremely easy for people to host / run their own image servers.

Overall, we're extremely happy with the outcome. We solved our own problem, which always feels great.

We also know that many other people may be in the same situation as we were, so we hope that the image server will provide positive value to the LXD / Incus community. We hope you like it!